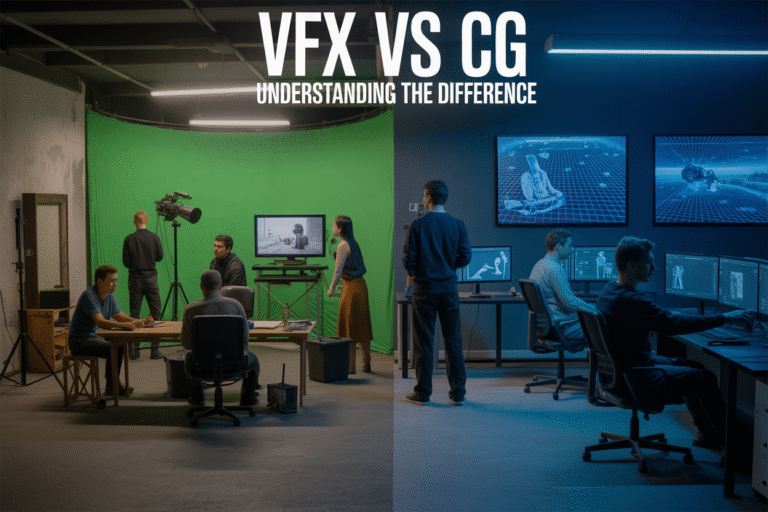

Ever stared at a mind-blowing movie scene and wondered, “How did they do that?” You’re not alone. Most people use “VFX” and “CG” interchangeably when talking about movie magic, but the two terms actually mean different things.

I’m going to clear up this confusion once and for all, so you’ll never mix them up at your next film buff gathering.

Visual effects (VFX) and computer graphics (CG) are related but distinct components in modern filmmaking. One manipulates existing footage while the other creates something from scratch.

But here’s where it gets interesting – the line between them is blurring faster than you think, creating a new hybrid approach that’s revolutionizing everything from blockbusters to indie films.

Defining the Basics: VFX vs CG

What Visual Effects (VFX) Actually Encompasses

VFX isn’t just explosions and alien spaceships. It’s the art of creating or manipulating imagery outside the context of a live-action shot. Think of it as anything that couldn’t be captured with a regular camera on set.

When you watch a superhero flying through buildings or monsters destroying a city, that’s VFX at work. But it also includes subtle enhancements like removing unwanted objects from scenes, changing the weather, or extending sets digitally.

The bread and butter of VFX includes:

- Compositing (layering different visual elements)

- Matte painting (creating backgrounds)

- Particle effects (smoke, fire, water)

- Motion capture

- Rotoscoping (tracing over footage)
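Compositing, the first item on that list, ultimately comes down to per-pixel arithmetic. The sketch below shows the classic Porter-Duff “over” operation in plain Python, assuming premultiplied RGBA values in the 0-1 range. The helper names are our own illustration, not a library API; compositing packages like Nuke build on this same idea with far more machinery.

```python
# Porter-Duff "over": the basic operation behind layer-based compositing.
# Assumes premultiplied-alpha RGBA tuples with every channel in 0.0-1.0.
# `over` and `over_image` are illustrative helpers, not a library API.

def over(fg, bg):
    """Place premultiplied RGBA pixel `fg` on top of pixel `bg`."""
    k = 1.0 - fg[3]  # fraction of the background that shows through
    # The same formula applies to color and alpha: fg + bg * (1 - fg_alpha)
    return tuple(f + b * k for f, b in zip(fg, bg))


def over_image(fg_img, bg_img):
    """Composite two same-sized images (lists of rows of pixels)."""
    return [[over(f, b) for f, b in zip(frow, brow)]
            for frow, brow in zip(fg_img, bg_img)]


if __name__ == "__main__":
    element = (0.5, 0.0, 0.0, 0.5)  # half-transparent red, premultiplied
    plate = (0.0, 0.0, 1.0, 1.0)    # opaque blue background plate
    print(over(element, plate))     # (0.5, 0.0, 0.5, 1.0)
```

Stacking many layers is then just repeated application of `over`, working from the backmost plate forward.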

VFX is basically the entire process of integrating real footage with manipulated imagery to create scenes that look realistic but would be impossible, dangerous, or just too expensive to film.

Computer Graphics (CG) Explained Simply

CG is the creation of visual content using computers. Full stop.

While VFX is about manipulating or enhancing footage, CG is about creating something from scratch using computer technology. The digital dinosaurs in “Jurassic Park” (as opposed to the animatronic ones)? CG. “Toy Story” characters? Pure CG.

CG breaks down into a few key areas:

- 3D modeling (creating three-dimensional digital objects)

- Texturing (adding surface details)

- Rigging (creating a digital skeleton for movement)

- Animation (bringing the models to life)

- Rendering (processing the final image)
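Rendering can sound abstract, so here is one small piece of it made concrete: a minimal pinhole-camera projection that maps a 3D point onto 2D pixel coordinates. It assumes a camera at the origin looking down the +z axis and a focal length given in pixels; a real renderer adds lens models, visibility, shading, and lighting on top of this.

```python
# Minimal pinhole-camera projection: maps a 3D point in camera space to 2D
# pixel coordinates. Assumes the camera sits at the origin looking down +z,
# image y grows downward, and `focal_px` is the focal length in pixels.

def project(point, focal_px, width, height):
    x, y, z = point
    if z <= 0:
        return None  # behind the camera: not visible
    # Perspective divide: farther points land closer to the image center.
    u = focal_px * x / z + width / 2
    v = -focal_px * y / z + height / 2
    return (u, v)


if __name__ == "__main__":
    # Two points with the same x/y offset but different depths.
    for p in [(1.0, 1.0, 5.0), (1.0, 1.0, 10.0)]:
        print(p, "->", project(p, focal_px=1000, width=1920, height=1080))
```

The point twice as far away lands half as far from the image center, which is the perspective effect in a nutshell.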

CG doesn’t need to start with any real-world footage – though it’s often combined with real footage through VFX processes.

Key Terminology You Need to Know

Trying to talk VFX and CG without knowing the lingo is like trying to order coffee in another language. You might get something, but probably not what you wanted.

Here’s your cheat sheet:

| Term | What It Actually Means |
|---|---|
| CGI | Computer-Generated Imagery; frequently used as one component of VFX |
| Rendering | The process of generating an image from a 2D or 3D model |
| Compositing | Combining visual elements from separate sources into one image |
| Pipeline | The workflow process from concept to final output |
| Dynamics | Simulation of physical systems like cloth, fluid, or hair |
| Matchmoving | Tracking camera movement in live-action footage so CG elements can be added with matching perspective |
| Green Screen | Filming actors against a solid-color backdrop (usually green) that is later replaced with other imagery |
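The Green Screen entry hides a neat bit of math. One simple way to pull a key is a color-difference approach: measure how much greener each pixel is than its red and blue channels, and turn that excess into transparency. This is a deliberately simplified sketch; the `gain` value is an illustrative assumption, and production keyers handle spill, soft edges, and noise far more carefully.

```python
# Simplified color-difference green screen key for one RGB pixel (0.0-1.0).
# Returns alpha: 1.0 = keep the pixel (foreground), 0.0 = fully replace it.
# `gain` controls how aggressively green excess turns into transparency;
# the default here is an illustrative assumption, not an industry value.

def green_key_alpha(rgb, gain=2.0):
    r, g, b = rgb
    green_excess = g - max(r, b)       # how much greener than red/blue
    alpha = 1.0 - gain * green_excess  # more green -> more transparent
    return min(1.0, max(0.0, alpha))   # clamp to the valid range


def key_and_replace(fg_rgb, bg_rgb, gain=2.0):
    """Blend a foreground pixel over a replacement background using the key."""
    a = green_key_alpha(fg_rgb, gain)
    return tuple(f * a + b * (1.0 - a) for f, b in zip(fg_rgb, bg_rgb))


if __name__ == "__main__":
    screen = (0.1, 0.9, 0.1)  # a strongly green screen pixel
    skin = (0.8, 0.6, 0.5)    # a skin-tone pixel
    print(green_key_alpha(screen))  # 0.0 (fully keyed out)
    print(green_key_alpha(skin))    # 1.0 (kept)
```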

Historical Evolution of Both Technologies

The journey from “The Abyss” to “Avatar” wasn’t overnight.

VFX started with practical effects – think miniatures, puppets, and optical illusions. Georges Méliès was pulling VFX tricks back in the 1890s with simple camera techniques.

The real game-changer came in 1973, when “Westworld” became the first feature film to use digital image processing, for the pixelated point of view of its robot gunslinger. Then “Tron” (1982) pushed boundaries with extensive computer-generated sequences.

CG evolved from simple wireframe models in the 1960s to the first fully computer-generated character in a feature film (the stained-glass knight in “Young Sherlock Holmes,” 1985). “Jurassic Park” (1993) blew minds with photorealistic CG dinosaurs, while “Toy Story” (1995), the first fully computer-animated feature, proved feature-length CG animation was viable.

Today’s tech would make pioneers’ jaws drop. What took months now takes hours. What was impossible is now routine. And the line between real and digital? It’s blurrier than ever.

The Technical Differences That Matter

A. Tools and Software Used in Each Field

The divide in tools between VFX and CG is like the difference between paintbrushes and sculpting clay: related, but serving different purposes.

VFX artists typically work with compositing software like Nuke, After Effects, and Fusion. These programs let them blend real footage with digital elements, creating seamless illusions. They’re manipulation tools at heart, designed to enhance what already exists.

CG specialists, on the other hand, create from scratch using 3D packages like Maya, 3ds Max, Blender, and Cinema 4D. Their world is about building digital assets that never existed in physical form.

There’s overlap too. Both camps use tools like Houdini for simulations and ZBrush for detailed modeling. But how they apply these tools fundamentally differs.

B. Workflow Comparisons Between VFX and CG

The workflows tell the real story:

| VFX Workflow | CG Workflow |
|---|---|
| Starts with shot footage | Starts with conceptual design |
| Focuses on enhancing existing material | Creates everything in digital space |
| Post-production heavy | Production-centric |
| Layer-based processing | Scene and object manipulation |
| Camera matching critical | Camera created from scratch |

CG pipelines build outward from nothing—modeling, texturing, rigging, and animating digital objects. VFX pipelines take existing footage and build inward, isolating elements to enhance, replace, or augment.

Think of CG as digital sculpture and VFX as digital surgery.

C. When VFX Incorporates CG Elements

This is where things get interesting. Modern blockbusters blend these disciplines constantly.

Take a superhero movie. The actor in costume? That’s practical. The energy beams shooting from their hands? CG elements. The way those elements interact with real environments? That’s VFX compositing.

CG becomes a subset of VFX when:

- Digital characters interact with live actors

- Impossible environments need to be created

- Physical effects would be dangerous or impractical

- Scale demands more than practical effects can deliver

The magic happens when you can’t tell where one ends and the other begins.

D. Processing Power Requirements

Both fields are computational beasts, but in different ways:

CG rendering is like a marathon—sustained heavy processing over long periods. A single frame of a CG character might take hours to render, with thousands of frames needed for a sequence.

VFX compositing is more like interval training—intense bursts of processing for specific effects, with more frequent preview capabilities.

This is why VFX houses look like server farms: render farms with hundreds of machines crunch numbers 24/7 just to deliver a two-minute sequence for your summer blockbuster.
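Those numbers add up quickly. This back-of-the-envelope sketch estimates the work behind a two-minute sequence; the 24 fps frame rate, the per-frame render time, and the farm size are illustrative assumptions, and real schedules also have to absorb multiple render passes, re-renders, and machines shared between shows.

```python
# Back-of-the-envelope render-farm estimate. All inputs are illustrative
# assumptions; real jobs also involve multiple passes, re-renders, and
# machines shared between shows.

def render_estimate(seconds_of_footage, hours_per_frame, machines, fps=24):
    frames = seconds_of_footage * fps
    machine_hours = frames * hours_per_frame
    # Idealized: frames spread evenly, each machine renders one at a time.
    wall_clock_hours = machine_hours / machines
    return frames, machine_hours, wall_clock_hours


if __name__ == "__main__":
    frames, machine_hours, wall = render_estimate(
        seconds_of_footage=120,  # a two-minute sequence
        hours_per_frame=4,       # assumed cost of one heavy CG frame
        machines=200,            # assumed farm size
    )
    print(f"{frames} frames, {machine_hours} machine-hours, "
          f"{wall:.1f} hours on the farm")
    # 2880 frames, 11520 machine-hours, 57.6 hours on the farm
```

On a single workstation, the same assumed job would take 11,520 hours, roughly 480 days.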

E. Real-time vs Rendered Graphics

The gap is closing here, but important distinctions remain.

Rendered graphics (traditional CG/VFX) prioritize quality over speed. A single frame might take hours but look photorealistic.

Real-time graphics (games, VR, LED wall virtual production) make compromises for immediacy. The results look impressive but lack the subtle details of fully rendered content.
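The difference in time budgets is stark: a real-time engine must finish each frame within a fixed slice of a second, while an offline renderer can take hours. This small sketch just does the arithmetic; the frame rates and the two-hour offline frame are example assumptions.

```python
# Compare real-time frame budgets with an offline render time.
# The frame rates and the 2-hour offline figure are example assumptions.

def frame_budget_ms(fps):
    """Milliseconds available to produce one frame at a given frame rate."""
    return 1000.0 / fps


if __name__ == "__main__":
    for fps in (24, 60, 90):  # film cadence, common game target, common VR target
        print(f"{fps} fps -> {frame_budget_ms(fps):.2f} ms per frame")
    offline_ms = 2 * 60 * 60 * 1000  # an assumed 2-hour offline frame
    ratio = offline_ms / frame_budget_ms(60)
    print(f"A 2-hour frame takes about {ratio:,.0f}x the 60 fps budget")
```

At 60 fps the engine gets about 16.7 milliseconds per frame, so the assumed two-hour offline frame uses roughly 432,000 times that budget.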

Virtual production is bridging these worlds. LED walls displaying real-time CG environments for actors to perform against combine immediate feedback with the ability to enhance in post-production.

The latest Unreal Engine and Unity builds are blurring lines further. What once took overnight rendering can now happen in minutes or seconds—not quite real-time, but getting closer every day.

Applications Across Different Industries

A. Film and Television Production Techniques

Visual effects and computer graphics have completely transformed how we make movies and TV shows. VFX teams jump in during post-production to enhance or modify footage that’s already been shot. They’re the wizards who remove unwanted elements, add digital characters, or create explosive scenes that would be impossible (or insanely dangerous) to film in real life.

Meanwhile, CG artists build entire worlds from scratch. Think about those jaw-dropping alien landscapes in “Avatar” or the photorealistic animals in “The Lion King” remake. Much of that CG work starts well before post-production, in pre-production and alongside the shoot, rather than being bolted on afterward.

Most modern productions use both techniques together. Marvel movies are perfect examples – they blend live actors with CG environments and use VFX to seamlessly stitch everything together.

B. Video Game Development Approaches

Gaming takes a different approach to VFX and CG than film does. In video games, CG forms the backbone of everything you see – from character models to environmental assets. The entire visual experience is rendered in real-time as you play.

VFX in games refers to the flashy, dynamic elements that respond to player actions – think spell effects, explosions, or environmental reactions. These effects need to be optimized differently than film VFX since they must render instantly without tanking your frame rate.
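To see why game VFX must be optimized differently, here is a minimal particle-system sketch of the kind used for sparks or smoke: each frame it spawns particles, moves them with simple physics, and discards expired ones, while a hard cap keeps the per-frame cost bounded. The class, the cap, and the physics constants are illustrative assumptions, not any engine’s API.

```python
import random
from dataclasses import dataclass

GRAVITY = -9.8  # m/s^2, pulls particles down along the y axis


@dataclass
class Particle:
    x: float
    y: float
    vx: float
    vy: float
    life: float  # seconds left before the particle is removed


class ParticleEmitter:
    """Hypothetical emitter with a hard particle cap to protect the frame rate."""

    def __init__(self, max_particles=500, spawn_per_second=200.0):
        self.max_particles = max_particles
        self.spawn_per_second = spawn_per_second
        self.particles = []
        self._spawn_accum = 0.0  # fractional spawns carried between frames

    def update(self, dt):
        # 1. Spawn new particles, but never exceed the budget.
        self._spawn_accum += self.spawn_per_second * dt
        while self._spawn_accum >= 1.0 and len(self.particles) < self.max_particles:
            self._spawn_accum -= 1.0
            self.particles.append(Particle(
                x=0.0, y=0.0,
                vx=random.uniform(-1.0, 1.0),
                vy=random.uniform(2.0, 5.0),
                life=random.uniform(0.5, 1.5),
            ))
        if len(self.particles) >= self.max_particles:
            self._spawn_accum = 0.0  # drop spawns that did not fit; don't bank them

        # 2. Move particles with semi-implicit Euler integration and age them.
        for p in self.particles:
            p.vy += GRAVITY * dt
            p.x += p.vx * dt
            p.y += p.vy * dt
            p.life -= dt

        # 3. Remove expired particles so the budget frees up for new ones.
        self.particles = [p for p in self.particles if p.life > 0.0]


if __name__ == "__main__":
    emitter = ParticleEmitter(max_particles=100)
    for _ in range(120):  # two seconds at 60 fps
        emitter.update(1.0 / 60.0)
    print(len(emitter.particles), "particles alive")
```

The cap is the important design choice: however busy the scene gets, the effect never costs more than a known number of particle updates per frame.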

The line between VFX and CG gets especially blurry in games because everything exists in a digital environment. The key difference lies in whether something is a static asset (CG) or a dynamic, reactive element (VFX).

C. Architectural Visualization Methods

Architects have embraced both VFX and CG to revolutionize how they present designs to clients. CG allows them to create photorealistic renderings of buildings that don’t exist yet, complete with accurate lighting, materials, and scale.

VFX techniques come into play when architects want to show how their designs interact with the real world. They might superimpose a CG building onto actual footage of a site, or create fly-through animations that simulate how someone would experience moving through the space.
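At its simplest, a fly-through is a camera moving along a path defined by a handful of keyframes. This sketch linearly interpolates the camera position between timed keyframes; the keyframe values are made up for illustration, and production tools typically use smoother spline curves and also animate where the camera is pointing.

```python
from bisect import bisect_right

# Hypothetical fly-through keyframes: (time in seconds, (x, y, z) in meters).
KEYFRAMES = [
    (0.0, (0.0, 1.7, -20.0)),  # start outside the entrance at eye height
    (4.0, (0.0, 1.7, 0.0)),    # through the front door
    (8.0, (10.0, 1.7, 5.0)),   # across the lobby
]


def lerp(a, b, t):
    """Linear interpolation between scalars a and b for t in [0, 1]."""
    return a + (b - a) * t


def camera_position(keyframes, time):
    """Camera position at `time`, linearly interpolated between keyframes."""
    times = [k[0] for k in keyframes]
    if time <= times[0]:
        return keyframes[0][1]
    if time >= times[-1]:
        return keyframes[-1][1]
    i = bisect_right(times, time)  # index of the first keyframe after `time`
    (t0, p0), (t1, p1) = keyframes[i - 1], keyframes[i]
    t = (time - t0) / (t1 - t0)
    return tuple(lerp(a, b, t) for a, b in zip(p0, p1))


if __name__ == "__main__":
    for time in (0.0, 2.0, 4.0, 6.0, 8.0):
        print(f"t={time:.1f}s -> {camera_position(KEYFRAMES, time)}")
```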

The commercial real estate industry particularly benefits from these technologies, using them to pre-sell properties before construction even begins.

D. Medical and Scientific Applications

The medical field uses CG to create detailed anatomical models for education, surgical planning, and research. These models can be rotated, sectioned, and examined from any angle – something impossible with traditional medical illustrations.

VFX techniques help visualize invisible processes, like showing how medications interact with cells or how diseases progress over time. Medical researchers use these visualizations to communicate complex concepts to colleagues, patients, and the public.

In scientific research, CG models help physicists visualize quantum mechanics, climatologists display weather patterns, and astronomers map cosmic phenomena. VFX brings these models to life, creating compelling visualizations that make abstract concepts tangible.

Career Pathways and Specializations

A. Essential Skills for VFX Artists

VFX artists aren’t just button-pushers – they’re digital magicians who bring impossible things to life. If you’re eyeing this career path, you’ll need to sharpen these key skills:

Technical foundation – Master industry-standard software like Nuke, After Effects, and Houdini. Each studio has its preferences, but knowing the big players gives you a solid starting point.

Compositional eye – You could have all the technical chops in the world, but without understanding how to integrate VFX elements seamlessly with live footage, your work will stick out like a sore thumb.

Problem-solving mindset – Directors will throw impossible challenges at you: “Make this explosion bigger but keep the same color palette” or “The actor’s performance was great but can we digitally remove that sneeze?” You’ll need to figure it out.

Collaboration skills – Gone are the days of the solo VFX artist. Modern pipelines involve dozens of specialists, and you’ll need to play nice with others.

B. Required Expertise for CG Specialists

CG specialists build digital worlds from scratch. Their toolkit looks quite different:

3D modeling mastery – Whether organic creatures or mechanical objects, you’ll need to sculpt digital forms with precision using tools like Maya, ZBrush, or Blender.

Animation fundamentals – Understanding the principles of movement, timing, and weight makes the difference between CG that feels alive and CG that feels robotic.

Lighting theory – Great CG artists are essentially digital photographers who understand how light behaves, creates mood, and sells realism.

Texturing skills – No CG asset is complete without convincing surface details. You’ll need to master creating everything from skin pores to weathered metal.

Rendering knowledge – Understanding how to optimize scenes for rendering and troubleshoot technical issues separates professionals from hobbyists.
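The timing point under animation fundamentals can be made concrete. One classic principle, slow in and slow out, is often approximated with an easing curve; this sketch compares linear motion with smoothstep easing (3t² − 2t³) for a single animated value. The helper names are our own, and animation packages expose this through their own curve editors rather than code like this.

```python
# Compare linear timing with smoothstep easing for one animated channel.
# `linear`, `ease_in_out`, and `animate` are illustrative helpers.

def linear(t):
    return t


def ease_in_out(t):
    """Smoothstep easing: starts and ends with zero velocity."""
    t = min(1.0, max(0.0, t))
    return t * t * (3.0 - 2.0 * t)


def animate(start, end, frames, easing):
    """Values for `frames` frames (at least 2), including both endpoints."""
    return [start + (end - start) * easing(i / (frames - 1))
            for i in range(frames)]


if __name__ == "__main__":
    for name, fn in (("linear", linear), ("ease_in_out", ease_in_out)):
        values = animate(0.0, 100.0, 5, fn)
        print(f"{name:12s}", [round(v, 1) for v in values])
```

The eased version moves less in the first and last frames and more in the middle, which reads as an object accelerating and settling rather than starting and stopping mechanically.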

C. Overlapping Competencies Worth Developing

Smart professionals develop skills that bridge both worlds:

Color theory – Both VFX and CG work demand understanding how colors interact, influence mood, and maintain continuity across scenes.

Real-world physics – Whether compositing explosions or animating character movement, understanding how things actually behave in reality helps create believable digital elements.

Pipeline awareness – Knowing how your work fits into the larger production workflow makes you invaluable in either discipline.

Coding fundamentals – Basic scripting skills (Python is popular) help automate repetitive tasks and solve technical challenges in both fields.

Artistic eye – The most technical artists still need core artistic sensibilities. Drawing skills, composition knowledge, and aesthetic judgment elevate work in both VFX and CG.
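As an example of the repetitive work basic scripting can automate, here is a small Python sketch that checks a rendered image sequence for missing frames before it goes to compositing. The `name.####.exr` naming pattern is a hypothetical convention for illustration; every studio has its own.

```python
import re

# Hypothetical naming convention: <name>.<4-digit frame>.exr
FRAME_PATTERN = re.compile(r"^(?P<name>.+)\.(?P<frame>\d{4})\.exr$")


def find_missing_frames(filenames, first, last):
    """Return sorted frame numbers in [first, last] with no matching file."""
    present = set()
    for fname in filenames:
        match = FRAME_PATTERN.match(fname)
        if match:
            present.add(int(match.group("frame")))
    return [f for f in range(first, last + 1) if f not in present]


if __name__ == "__main__":
    # In practice you might pass os.listdir(render_dir); a literal list keeps
    # the example self-contained.
    rendered = [f"shot_v001.{n:04d}.exr" for n in range(1001, 1011)
                if n not in (1004, 1007)]
    print(find_missing_frames(rendered, 1001, 1010))  # [1004, 1007]
```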

The good news? Many professionals migrate between these specialties throughout their careers, building impressive skill portfolios that make them adaptable as technology and industry demands evolve.

Future Trends Shaping Both Fields

AI-Driven Advancements in Visual Effects

The VFX industry is being transformed by AI, and it’s happening faster than many predicted. Just a few years ago, tasks like rotoscoping and compositing required painstaking manual work. Now? AI systems can automatically track and mask objects across hundreds of frames in a fraction of the time.

Machine learning algorithms are getting scarily good at generating realistic digital humans. The uncanny valley is shrinking fast. Studios that once needed dozens of artists to create a photorealistic character can now achieve similar results with smaller teams and smarter tools.

Look at what’s happening with facial animation. Deep learning systems can now analyze an actor’s performance and translate those subtle expressions to digital characters without those funny dots all over their face. The tech isn’t perfect yet, but it’s improving at breakneck speed.

Real-time CG Technologies on the Rise

Remember when rendering a single frame of high-quality CG always took hours? For a growing share of work, that’s no longer true. Real-time rendering engines like Unreal and Unity have crashed the party, and they’re changing everything.

Game engines have broken out of their gaming boxes and stormed into film and TV production. Directors can now see near-final-quality visuals on set instead of waiting weeks for rendering. It’s like jumping from horse-drawn carriages straight to sports cars.

The gap between pre-rendered and real-time CG is closing fast. Just check out what Epic did with their MetaHuman Creator – characters that would’ve taken weeks to build can now be created in hours, with realistic hair, skin, and clothing that move naturally in real time.

Virtual Production’s Impact on Traditional Processes

Virtual production has flipped the traditional filmmaking pipeline on its head. The old way? Shoot first, add VFX later. The new way? Create your digital environment first, then film actors directly interacting with it.

LED volumes (those massive walls of screens) are becoming the new green screens. Shows like “The Mandalorian” proved you can capture final pixels in-camera, with realistic lighting baked in. That means far fewer “fix it in post” headaches.

This shift is blurring job roles like never before. Cinematographers now need to understand game engines. VFX artists need to think like production designers. The lines between pre-production, production, and post-production are melting away.

Emerging Hardware Changing the Game

Hardware innovations are supercharging both VFX and CG. GPUs keep getting more powerful and more efficient per watt. Cloud rendering farms mean small studios can access thousands of processors on demand.

Motion capture has gone wireless and portable. Remember those massive mo-cap stages with dozens of cameras? Now indie creators can capture professional-grade animation with just a few sensors and a laptop.

Extended reality (XR) headsets are creating new ways to work. Artists can sculpt 3D models in virtual space, walk around them, and make adjustments with hand gestures instead of clicking a mouse. It’s making the creative process more intuitive and fluid than ever before.

The visual entertainment industry’s foundation lies in both VFX and CG technologies, each with distinct technical processes, applications, and career specializations. While VFX encompasses the integration of live footage with manipulated imagery, CG represents the broader field of digitally created content. These technologies continue to transform industries ranging from film and gaming to architecture and healthcare, creating diverse career opportunities for specialists.

As technology advances, we can expect to see increasingly blurred lines between VFX and CG, with real-time rendering, AI-driven solutions, and virtual production becoming standard practices. Whether you’re considering a career in these fields or simply appreciating the artistry behind your favorite visual media, understanding the nuances between VFX and CG provides valuable insight into the digital creation process that shapes our entertainment experiences.

FAQ

What Is the Difference Between VFX and CGI?

The difference between VFX and CGI lies in their purpose: VFX includes all visual effects added in post-production, while CGI focuses on digitally created images or 3D elements.

Why Should You Know the Difference Between VFX and CGI?

Knowing the difference between VFX and CGI helps professionals and students choose the right tools, plan production pipelines, and collaborate more effectively in filmmaking.

Is CGI a Part of VFX or Something Different?

In live-action work, CGI is usually one part of VFX. The difference between VFX and CGI is that CGI deals with 3D creation, while VFX includes compositing, green screen, and integrating CGI with real footage.

How Does the Difference Between VFX and CGI Affect Filmmaking?

Understanding the difference between VFX and CGI allows filmmakers to budget, schedule, and assign teams accurately during the production and post-production stages.

Which Software Tools Are Used in VFX and CGI?

The difference between VFX and CGI also shows in the tools: CGI often uses Maya or Blender for 3D modeling, while VFX uses After Effects, Nuke, or Fusion for compositing and integration.

Can You Work in VFX Without Learning CGI?

Yes, you can work in VFX without deep CGI knowledge, because VFX includes many roles that aren’t about 3D creation, such as rotoscoping, tracking, and color grading.

Are CGI and VFX Used Together in Movies?

Absolutely! CGI creates digital content, while VFX blends it into real-world scenes, and the two are often used together to create seamless visuals.

Does the Difference Between VFX and CGI Matter in Animation?

Yes. While full CGI animation uses entirely computer-generated scenes, VFX may add further effects in hybrid animation. So the difference between VFX and CGI still applies.

Is the Learning Curve Different for VFX and CGI?

Yes. CGI focuses on 3D skills like modeling and rigging, while VFX involves tracking, keying, and digital compositing techniques.

How Do Job Roles Reflect the Difference Between VFX and CGI?

VFX roles include compositors, roto artists, and match movers. CGI roles include 3D artists, animators, and render specialists. That reflects the difference between VFX and CGI in the industry.

Are There Career Benefits to Understanding the Difference Between VFX and CGI?

Definitely. Professionals who understand the difference between VFX and CGI are better prepared for specialized roles and team collaboration across production pipelines.

Can a Project Use Only VFX or Only CGI?

Yes. A fully computer-animated film may use only CGI, while a live-action film may only need VFX for enhancements. The difference between VFX and CGI lets creators choose the right visual strategy.

Do You Need Different Hardware for VFX and CGI?

Often, yes. CGI rendering is typically the heavier load, running on CPU or GPU render farms depending on the renderer, while compositing also needs strong workstations with plenty of memory and fast storage. So the difference between VFX and CGI can affect hardware needs.

How Is the Difference Between VFX and CGI Taught in Courses?

Most VFX courses include both areas. They first explain the difference between VFX and CGI, then teach relevant tools like Blender, After Effects, or Nuke based on specialization.

What Are Common Misconceptions About the Difference Between VFX and CGI?

A common myth is that VFX and CGI are the same. The truth is that the difference between VFX and CGI lies in scope: VFX covers all enhancements, while CGI is just one digital toolset.